¶ Docker Networking

¶ Things you need to know

¶ 1. Linux Network Namespace

By default, the OS has a single set of network interfaces and routing table entries that every process shares. Network namespaces change this fundamental assumption. With network namespaces, you can have separate instances of network interfaces and routing tables that operate independently of each other. So how do we manage network namespaces on Linux?

- Create and list network namespaces

```
# ip netns add ns1
# ip netns add ns2
# ip netns list
ns2
ns1
```

- Delete a network namespace (ns2 is deleted here because ns1 is still used in the following steps)

```
# ip netns delete ns2
```

- Execute a command inside a network namespace

```
# Usage syntax: ip netns exec <network namespace name> <command>
# ip netns exec ns1 ip a
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
```

- Add an interface to a network namespace (create a veth pair and move one end into ns1)

```
# ip link add veth-a type veth peer name veth-b
# ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: enp0s5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000
    link/ether 00:1c:42:bd:b1:f2 brd ff:ff:ff:ff:ff:ff
17: veth-b@veth-a: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether b6:72:79:57:5d:77 brd ff:ff:ff:ff:ff:ff
18: veth-a@veth-b: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether 12:82:23:2d:50:5d brd ff:ff:ff:ff:ff:ff
# ip link set veth-b netns ns1
# ip netns exec ns1 ip link
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
17: veth-b@if18: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether b6:72:79:57:5d:77 brd ff:ff:ff:ff:ff:ff link-netnsid 0
```
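The suffix after @ in the output above is easy to misread. While both ends live in the same namespace, ip link prints the peer's name (veth-b@veth-a); once veth-b is moved into ns1, it prints the peer's interface index instead (veth-b@if18, i.e. veth-a, which is index 18 on the host). Below is a minimal Python sketch, assuming the one-line header format shown above, that splits such a header into index, name, peer and flags. The names parse_link_header and peer_ifindex are hypothetical; for real scripts, ip -j link (JSON output) is usually a sturdier input than regex parsing.

```python
import re
from typing import List, NamedTuple, Optional


class LinkHeader(NamedTuple):
    index: int           # ifindex, the number before the first colon
    name: str            # interface name, e.g. "veth-b"
    peer: Optional[str]  # text after "@": a peer name, or "ifN" if the peer is in another netns
    flags: List[str]     # contents of <...>, e.g. ["BROADCAST", "MULTICAST"]


# Matches e.g. "17: veth-b@if18: <BROADCAST,MULTICAST> mtu 1500 qdisc noop ..."
_HEADER = re.compile(r"^(\d+):\s+([^:@\s]+)(?:@([^:\s]+))?:\s+<([^>]*)>")


def parse_link_header(line: str) -> Optional[LinkHeader]:
    """Parse the first line of an `ip link` entry; return None for any other line."""
    m = _HEADER.match(line.strip())
    if m is None:
        return None
    index, name, peer, flags = m.groups()
    return LinkHeader(int(index), name, peer, flags.split(",") if flags else [])


def peer_ifindex(header: LinkHeader) -> Optional[int]:
    """Return the peer's ifindex when the peer is shown as "ifN", else None."""
    if header.peer is not None and re.fullmatch(r"if\d+", header.peer):
        return int(header.peer[2:])
    return None


if __name__ == "__main__":
    h = parse_link_header("17: veth-b@if18: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN")
    print(h.name, h.peer, peer_ifindex(h))  # veth-b if18 18
```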

- Assign IP addresses to the veth interfaces, bring them up, and test connectivity

```
# ip addr add 10.0.0.1/24 dev veth-a
# ip link set veth-a up
# ip link
# ip netns exec ns1 ip addr add 10.0.0.2/24 dev veth-b
# ip netns exec ns1 ip link set dev veth-b up
# ip netns exec ns1 ip link
# ping 10.0.0.2
PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=1.96 ms
64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.045 ms
64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=0.050 ms
64 bytes from 10.0.0.2: icmp_seq=4 ttl=64 time=0.044 ms
^C
--- 10.0.0.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 41ms
rtt min/avg/max/mdev = 0.044/0.525/1.964/0.830 ms
```
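Why does ping 10.0.0.2 work without adding any route? When 10.0.0.1/24 is assigned to veth-a and the link is up, the kernel installs a connected route for the whole 10.0.0.0/24 prefix (ip route should show a line like "10.0.0.0/24 dev veth-a proto kernel scope link src 10.0.0.1"), so every address inside that prefix is reached directly over veth-a. A minimal Python sketch of that prefix check with the standard ipaddress module; the helper name directly_reachable is hypothetical, and it models only the connected route, not the rest of the routing table.

```python
import ipaddress


def directly_reachable(local_iface: str, target: str) -> bool:
    """True if `target` lies inside the connected prefix of `local_iface`.

    `local_iface` is an address with its prefix length, e.g. "10.0.0.1/24".
    Only the connected route the kernel adds for that address is modelled.
    """
    iface = ipaddress.ip_interface(local_iface)
    return ipaddress.ip_address(target) in iface.network


if __name__ == "__main__":
    print(ipaddress.ip_interface("10.0.0.1/24").network)  # 10.0.0.0/24
    print(directly_reachable("10.0.0.1/24", "10.0.0.2"))  # True
    print(directly_reachable("10.0.0.1/24", "10.0.1.2"))  # False
```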

¶ 2. Traditional VM network types

| Network type | VMware | VirtualBox |
|---|---|---|
| Bridged | With bridged networking, the virtual machine has direct access to an external Ethernet network. The virtual machine must have its own IP address on the external network. If your host system is on a network and you have a separate IP address for your virtual machine (or can get an IP address from a DHCP server), select this setting. Other computers on the network can then communicate directly with the virtual machine. | This is for more advanced networking needs, such as network simulations and running servers in a guest. When enabled, Oracle VM VirtualBox connects to one of your installed network cards and exchanges network packets directly, circumventing your host operating system's network stack. |
| NAT | With NAT, the virtual machine and the host system share a single network identity that is not visible outside the network. Select NAT if you do not have a separate IP address for the virtual machine, but you want to be able to connect to the Internet. | The Network Address Translation (NAT) service works in a similar way to a home router, grouping the systems using it into a network and preventing systems outside of this network from directly accessing systems inside it, but letting systems inside communicate with each other and with systems outside using TCP and UDP over IPv4 and IPv6. |
| Host-only | Host-only networking provides a network connection between the virtual machine and the host system, using a virtual network adapter that is visible to the host operating system. | This can be used to create a network containing the host and a set of virtual machines, without the need for the host's physical network interface. Instead, a virtual network interface, similar to a loopback interface, is created on the host, providing connectivity among virtual machines and the host. |
| None | Do not configure a network connection for the virtual machine. | This is as if no Ethernet cable was plugged into the NIC. |

¶ Anatomy of Docker Networking

¶ Network drivers of Docker

Docker’s networking subsystem is pluggable, using drivers. Several drivers exist by default, and provide core networking functionality:

- bridge: The default network driver. If you don’t specify a driver, this is the type of network you are creating. Bridge networks are usually used when you need multiple containers to communicate on the same Docker host. There are some differences between user-defined bridges and the default bridge:
  - User-defined bridges provide automatic DNS resolution between containers.
  - User-defined bridges provide better isolation.
  - Containers can be attached and detached from user-defined networks on the fly.
  - Each user-defined network creates a configurable bridge.
  - Linked containers on the default bridge network share environment variables.

```
# docker run -itd --name b-nginx nginx
8b2cb1358266bd386d3428f654e1fb8ee6c8fe6773e2d42229bcc0ec66fd2e9f
# docker inspect b-nginx
[
    {
        ...
        "EndpointID": "b93c896084be44552e04d2364d155e19af3f307fb8d9aa04872cd27e8bbf5e9a",
        "Gateway": "172.17.0.1",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "IPAddress": "172.17.0.2",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "MacAddress": "02:42:ac:11:00:02",
        "Networks": {
            "bridge": {
                "IPAMConfig": null,
                "Links": null,
                "Aliases": null,
                "NetworkID": "b22dfcac021950145c20dac884e6579908814608d1a9e7d15afb7a8fcfeb6069",
                "EndpointID": "b93c896084be44552e04d2364d155e19af3f307fb8d9aa04872cd27e8bbf5e9a",
                "Gateway": "172.17.0.1",
                "IPAddress": "172.17.0.2",
                "IPPrefixLen": 16,
                "IPv6Gateway": "",
                "GlobalIPv6Address": "",
                "GlobalIPv6PrefixLen": 0,
                "MacAddress": "02:42:ac:11:00:02",
                "DriverOpts": null
            }
        }
    }
]
```

- host: For standalone containers, remove network isolation between the container and the Docker host, and use the host’s networking directly.

```
# docker run --name h-nginx -itd --network host nginx
1c863ae6e9c47040a3a9d14175d5d434d8d40c4bda14f0668f00bb9f4c62d313
# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1c863ae6e9c4 nginx "/docker-entrypoint.…" 4 seconds ago Up 3 seconds h-nginx
# netstat -lntp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 6000/nginx: master
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 318/sshd
tcp6 0 0 :::80 :::* LISTEN 6000/nginx: master
```

- none: For this container, disable all networking. Usually used in conjunction with a custom network driver. none is not available for swarm services. See disable container networking.

```
# docker run --name none_network -itd --network none busybox
Unable to find image 'busybox:latest' locally
latest: Pulling from library/busybox
009932687766: Pull complete
Digest: sha256:afcc7f1ac1b49db317a7196c902e61c6c3c4607d63599ee1a82d702d249a0ccb
Status: Downloaded newer image for busybox:latest
07133f28f9f6b10a183a91435a85618c5ef19fd88fae87fb11db84dd24f8b915
# docker exec none_network sh -c 'ip a'
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
```

- overlay: Overlay networks connect multiple Docker daemons together and enable swarm services to communicate with each other. You can also use overlay networks to facilitate communication between a swarm service and a standalone container, or between two standalone containers on different Docker daemons. This strategy removes the need to do OS-level routing between these containers. See overlay networks. Overlay networks are best when you need containers running on different Docker hosts to communicate, or when multiple applications work together using swarm services.
- ipvlan: IPvlan networks give users total control over both IPv4 and IPv6 addressing. The VLAN driver builds on top of that in giving operators complete control of layer 2 VLAN tagging and even IPvlan L3 routing for users interested in underlay network integration. See IPvlan networks.
- macvlan: Macvlan networks allow you to assign a MAC address to a container, making it appear as a physical device on your network. The Docker daemon routes traffic to containers by their MAC addresses. Using the macvlan driver is sometimes the best choice when dealing with legacy applications that expect to be directly connected to the physical network, rather than routed through the Docker host’s network stack. See Macvlan networks.
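docker inspect prints a JSON array with one object per container, and the per-network addresses live under NetworkSettings.Networks (the same path the --format templates later in this post use). A minimal Python sketch that pulls them out; the SAMPLE below is a trimmed, hand-written input shaped like the b-nginx output above that keeps only the fields the function reads, and the function name network_addresses is hypothetical. In practice you would feed it the stdout of docker inspect <container>.

```python
import json

# Trimmed sample shaped like the `docker inspect b-nginx` output above;
# only the fields used below are kept.
SAMPLE = """
[
  {
    "Name": "/b-nginx",
    "NetworkSettings": {
      "Networks": {
        "bridge": {"Gateway": "172.17.0.1", "IPAddress": "172.17.0.2", "IPPrefixLen": 16}
      }
    }
  }
]
"""


def network_addresses(inspect_output: str) -> dict:
    """Map container name -> {network name: IP address on that network}."""
    result = {}
    for container in json.loads(inspect_output):
        name = container["Name"].lstrip("/")  # Docker prefixes names with "/"
        networks = container["NetworkSettings"]["Networks"] or {}
        result[name] = {net: cfg.get("IPAddress", "") for net, cfg in networks.items()}
    return result


if __name__ == "__main__":
    print(network_addresses(SAMPLE))  # {'b-nginx': {'bridge': '172.17.0.2'}}
```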

¶ Bridge Network Deep Dive

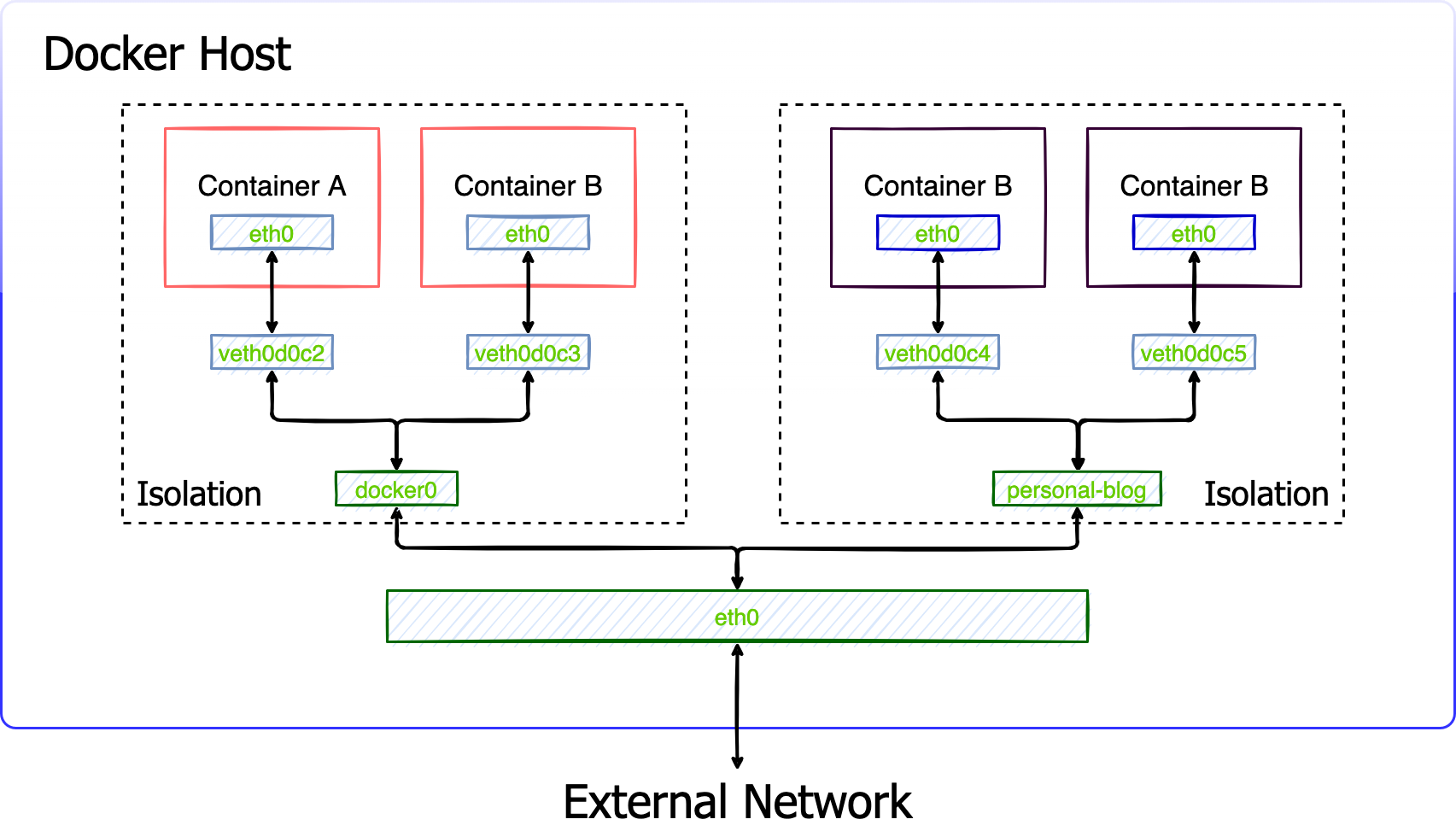

Docker uses several Linux namespace technologies for isolation, such as user, PID, and mount namespaces. For network isolation, Docker uses Linux network namespaces: each Docker container has its own network namespace, which means it has its own network interfaces, IP addresses, routing table, and so on.

Normally, we can use the command ip netns list to show all network namespaces on a Linux host, but with containers running, the output is still empty:

```
# ip netns list
#
```

The containers' network namespaces are missing because ip netns list only reads /var/run/netns, and Docker does not register its namespaces there. Instead, Docker keeps the namespace handles of its containers under /var/run/docker/netns:

```
# ls -lhrt /var/run/docker/netns
```
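If you want ip netns (and ip netns exec) to work with a container anyway, a common trick is to add an entry to /var/run/netns yourself that points at /proc/<pid>/ns/net of the container's main process, where the PID is what docker inspect --format '{{.State.Pid}}' <container> prints. A minimal Python sketch of both steps, listing the entries of a namespace directory and adding such a symlink; the function names are hypothetical, real use against /var/run/netns needs root, and the link dangles once the container stops. The directory is a parameter so the sketch can be tried on a scratch directory.

```python
import os
import tempfile
from typing import List


def list_netns(netns_dir: str = "/var/run/netns") -> List[str]:
    """Return the (sorted) namespace names in netns_dir, similar to `ip netns list`.

    Returns an empty list when the directory does not exist.
    """
    try:
        return sorted(os.listdir(netns_dir))
    except FileNotFoundError:
        return []


def expose_netns(pid: int, name: str, netns_dir: str = "/var/run/netns") -> str:
    """Symlink /proc/<pid>/ns/net into netns_dir as `name`, like `ln -sf`.

    With netns_dir=/var/run/netns (root required) this lets
    `ip netns exec <name> ...` enter that process's network namespace.
    """
    os.makedirs(netns_dir, exist_ok=True)
    link = os.path.join(netns_dir, name)
    if os.path.lexists(link):
        os.remove(link)  # replace a stale entry from an earlier run
    os.symlink(f"/proc/{pid}/ns/net", link)
    return link


if __name__ == "__main__":
    # Try it on a scratch directory instead of /var/run/netns.
    scratch = tempfile.mkdtemp()
    print(list_netns(scratch))  # []
    expose_netns(os.getpid(), "demo", scratch)
    print(list_netns(scratch))  # ['demo']
```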

docker0 is the Linux bridge behind Docker's default bridge network. It is part of the host's network stack, so we can use ifconfig or ip on the host to check its networking information. While no running container is attached to docker0, its state is DOWN (NO-CARRIER); as soon as a container attached to it starts, its state changes to UP automatically.

```
# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
#
# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff
    inet 192.168.93.146/24 brd 192.168.93.255 scope global dynamic enp1s0
       valid_lft 85598sec preferred_lft 85598sec
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:bd:1c:1e:d6 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
# docker run -itd --name mybox busybox
fc49b85b1fa567fe9589ae3ad75d46eda65d03d284bd384438c4a2298dac5dca
# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
fc49b85b1fa5 busybox "sh" 16 seconds ago Up 15 seconds mybox
# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff
    inet 192.168.93.146/24 brd 192.168.93.255 scope global dynamic enp1s0
       valid_lft 85466sec preferred_lft 85466sec
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:bd:1c:1e:d6 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
5: veth0d0c2e2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 1a:97:0e:c2:b6:db brd ff:ff:ff:ff:ff:ff link-netnsid 0
```
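Two things in this transcript are worth checking from a script: the operational state of docker0 (DOWN with NO-CARRIER while nothing is attached, UP afterwards) and which veth interfaces are plugged into it (the ones marked master docker0). A minimal Python sketch that extracts both from ip a text, assuming the header-line format shown above; the function name bridge_summary is hypothetical, and ip -j link or bridge link are alternatives that avoid text parsing altogether.

```python
import re
from typing import List, Optional, Tuple

# Matches the first line of an `ip a` / `ip link` entry, e.g.
# "5: veth0d0c2e2@if4: <BROADCAST,...> mtu 1500 qdisc noqueue master docker0 state UP ..."
_HEADER = re.compile(r"^\d+:\s+([^:@\s]+)(?:@[^:\s]+)?:\s+<[^>]*>(.*)$")


def _value_after(tokens: List[str], key: str) -> Optional[str]:
    """Return the token that follows `key` (e.g. "state" -> "UP"), or None."""
    try:
        return tokens[tokens.index(key) + 1]
    except (ValueError, IndexError):
        return None


def bridge_summary(ip_a_output: str, bridge: str = "docker0") -> Tuple[str, List[str]]:
    """Return (operational state of `bridge`, interfaces whose master is `bridge`)."""
    state = "UNKNOWN"
    ports: List[str] = []
    for line in ip_a_output.splitlines():
        m = _HEADER.match(line.strip())
        if m is None:
            continue  # link/ether, inet and valid_lft lines belong to the entry above
        name, rest = m.group(1), m.group(2).split()
        if name == bridge:
            state = _value_after(rest, "state") or "UNKNOWN"
        elif _value_after(rest, "master") == bridge:
            ports.append(name)
    return state, ports


if __name__ == "__main__":
    sample = """\
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
5: veth0d0c2e2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 1a:97:0e:c2:b6:db brd ff:ff:ff:ff:ff:ff link-netnsid 0
"""
    print(bridge_summary(sample))  # ('UP', ['veth0d0c2e2'])
```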

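When a container is created, Docker creates a veth pair: one end stays in the host's network namespace and is attached to docker0 (veth0d0c2e2 above, marked master docker0; the @if4 suffix is the interface index of its peer inside the container), and the other end is moved into the container's network namespace. It is as if all containers on the same Docker network were plugged into the same switch (docker0), so they can communicate with each other, while containers on different networks stay isolated. The basic network looks like the figure below.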

Containers on the same Docker network share the same subnet. Let's check the containers' network information with Docker. In this scenario, we create a user-defined bridge network named personal, start two containers from the ==busybox== image attached to docker0 and two attached to personal, and then check the connectivity between these four containers.

```
# docker network create --subnet 172.21.0.0/16 --ip-range 172.21.240.0/20 personal
93e6e22b983d6a92db3e86b1a05be1006c3926be008123b45cad96487368aef4
# docker network ls
NETWORK ID NAME DRIVER SCOPE
49cd8fc95af9 bridge bridge local
8afd246383f4 host host local
80155bcf6d01 none null local
93e6e22b983d personal bridge local
# docker run -itd --name mybox1_docker0 busybox
9e68961b6a33b63f56bcb11c4519c6343ec952e5679eb97b54995b81376a4394
# docker inspect --format '{{.NetworkSettings.IPAddress}}' mybox1_docker0
172.17.0.2
# docker run -itd --name mybox2_docker0 busybox
8cabae7a19f1d3c7f4951d1f57c4084d8367a7379c686d2c74b57e1dd8b72415
# docker inspect --format '{{.NetworkSettings.IPAddress}}' mybox2_docker0
172.17.0.3
# docker run -itd --name mybox1_personal --network personal busybox
dbd364cd7d9f63053f49832eaf97e63ebd5651549691f4d8a66c8a0f3798ebf8
# docker inspect --format '{{.NetworkSettings.Networks.personal.IPAddress}}' mybox1_personal
172.21.240.1
# docker run -itd --name mybox2_personal --network personal busybox
366188bb8c011e02a140adbe5fa118b5c67cc2529fdbf0049117b813134f60c5
# docker inspect --format '{{.NetworkSettings.Networks.personal.IPAddress}}' mybox2_personal
172.21.240.2
```
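The --subnet and --ip-range options split the address space: the personal network as a whole is 172.21.0.0/16, but container addresses are handed out only from 172.21.240.0/20, which is why the two containers received 172.21.240.1 and 172.21.240.2. A short sketch with Python's ipaddress module that checks these relationships using the values from the commands above; the helper describe_range is hypothetical and does not reproduce Docker's IPAM allocation order.

```python
import ipaddress

# Values from the `docker network create` command above.
subnet = ipaddress.ip_network("172.21.0.0/16")          # --subnet
ip_range = ipaddress.ip_network("172.21.240.0/20")      # --ip-range
default_bridge = ipaddress.ip_network("172.17.0.0/16")  # docker0


def describe_range(subnet, ip_range):
    """Return (range inside subnet?, first address, last address, number of addresses)."""
    return (
        ip_range.subnet_of(subnet),
        str(ip_range.network_address),
        str(ip_range.broadcast_address),
        ip_range.num_addresses,
    )


if __name__ == "__main__":
    print(describe_range(subnet, ip_range))
    # (True, '172.21.240.0', '172.21.255.255', 4096)
    for addr in ("172.21.240.1", "172.21.240.2", "172.17.0.2"):
        print(addr, ipaddress.ip_address(addr) in ip_range)
    print(subnet.overlaps(default_bridge))  # False
```

The last check confirms that the new network does not overlap the default bridge's 172.17.0.0/16.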

```
# docker exec mybox1_docker0 sh -c "ping -c 4 172.17.0.3"
PING 172.17.0.3 (172.17.0.3): 56 data bytes
64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.073 ms
64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.074 ms
64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.111 ms
64 bytes from 172.17.0.3: seq=3 ttl=64 time=0.107 ms
--- 172.17.0.3 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.073/0.091/0.111 ms
# docker exec mybox1_docker0 sh -c "ping -c 4 172.21.240.2"
PING 172.21.240.2 (172.21.240.2): 56 data bytes
--- 172.21.240.2 ping statistics ---
4 packets transmitted, 0 packets received, 100% packet loss
# docker exec mybox1_personal sh -c "ping -c 4 172.21.240.2"
PING 172.21.240.2 (172.21.240.2): 56 data bytes
64 bytes from 172.21.240.2: seq=0 ttl=64 time=0.094 ms
64 bytes from 172.21.240.2: seq=1 ttl=64 time=0.063 ms
64 bytes from 172.21.240.2: seq=2 ttl=64 time=0.062 ms
64 bytes from 172.21.240.2: seq=3 ttl=64 time=0.068 ms
--- 172.21.240.2 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.062/0.071/0.094 ms
# docker exec mybox1_personal sh -c "ping -c 4 172.17.0.3"
PING 172.17.0.3 (172.17.0.3): 56 data bytes
--- 172.17.0.3 ping statistics ---
4 packets transmitted, 0 packets received, 100% packet loss
```

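Note: the busybox image cannot resolve container names to IP addresses because its /etc/nsswitch.conf is empty; appending "hosts: files dns" to /etc/nsswitch.conf enables resolving container names to IPs. Automatic name resolution between containers is only provided on user-defined networks such as personal, not on the default bridge.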
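The ping results follow a simple rule: two containers on bridge networks can reach each other only if they share at least one network. A toy Python model of that rule for the four containers above; it is a simplification (it ignores iptables details, inter-container communication settings and published ports), and the function names are hypothetical. Attaching a second network with docker network connect personal mybox1_docker0 would, under this model, make mybox2_personal reachable from mybox1_docker0.

```python
from itertools import combinations
from typing import Dict, Set

# Networks each container in the experiment is attached to
# ("bridge" is the default network backed by docker0).
ATTACHMENTS: Dict[str, Set[str]] = {
    "mybox1_docker0": {"bridge"},
    "mybox2_docker0": {"bridge"},
    "mybox1_personal": {"personal"},
    "mybox2_personal": {"personal"},
}


def can_reach(a: str, b: str, attachments: Dict[str, Set[str]] = ATTACHMENTS) -> bool:
    """Simplified rule: two containers can talk iff they share at least one network."""
    return bool(attachments[a] & attachments[b])


def connect(attachments: Dict[str, Set[str]], container: str, network: str) -> None:
    """Model of `docker network connect <network> <container>`: add one more network."""
    attachments.setdefault(container, set()).add(network)


if __name__ == "__main__":
    for a, b in combinations(sorted(ATTACHMENTS), 2):
        print(f"{a:16} -> {b:16} {'reachable' if can_reach(a, b) else 'isolated'}")
```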

¶ Host Network Deep Dive

In host mode, the container uses the host's network namespace, so no veth pair is created. That means the container has the same IP and MAC addresses as the host, and any port it listens on is exposed directly on the host's network stack, with all the security implications that brings.

```
# docker run -itd --name host-nginx --network host nginx
1ce5ae5fbe345b9fcf70dff9dbae816085f19042b6bf8ef3751807f4511f9e0a
# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff
    inet 192.168.93.146/24 brd 192.168.93.255 scope global dynamic enp1s0
       valid_lft 57837sec preferred_lft 57837sec
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:bd:1c:1e:d6 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
18: br-93e6e22b983d: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:e0:17:8d:5e brd ff:ff:ff:ff:ff:ff
    inet 172.21.240.0/16 brd 172.21.255.255 scope global br-93e6e22b983d
       valid_lft forever preferred_lft forever
# netstat -lntp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 5129/nginx: master
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 313/sshd
tcp6 0 0 :::80 :::* LISTEN 5129/nginx: master
# curl -I 192.168.93.146
HTTP/1.1 200 OK
Server: nginx/1.21.6
Date: Tue, 22 Feb 2022 18:40:37 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT
Connection: keep-alive
ETag: "61f01158-267"
Accept-Ranges: bytes
```
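One practical consequence of sharing the host's port space: only one process can listen on a given address and port, so a second host-mode nginx (or an nginx running directly on the host) would fail to bind port 80, and nginx typically logs "bind() to 0.0.0.0:80 failed (98: Address already in use)". A minimal Python sketch of that conflict using plain sockets on 127.0.0.1 with a kernel-chosen port, so it runs without root; it illustrates the shared-namespace behaviour, not anything Docker-specific, and the helper names are hypothetical.

```python
import errno
import socket


def try_bind(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Bind and listen on host:port; raises OSError if the port is already taken."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        s.bind((host, port))
        s.listen()
    except OSError:
        s.close()
        raise
    return s


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """True if another socket in this network namespace already holds host:port."""
    try:
        try_bind(port, host).close()
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return True
        raise
    return False


if __name__ == "__main__":
    first = try_bind(0)  # port 0: let the kernel pick a free port
    port = first.getsockname()[1]
    print(port_in_use(port))  # True, like a second host-mode nginx on port 80
    first.close()
```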

¶ Expose Container

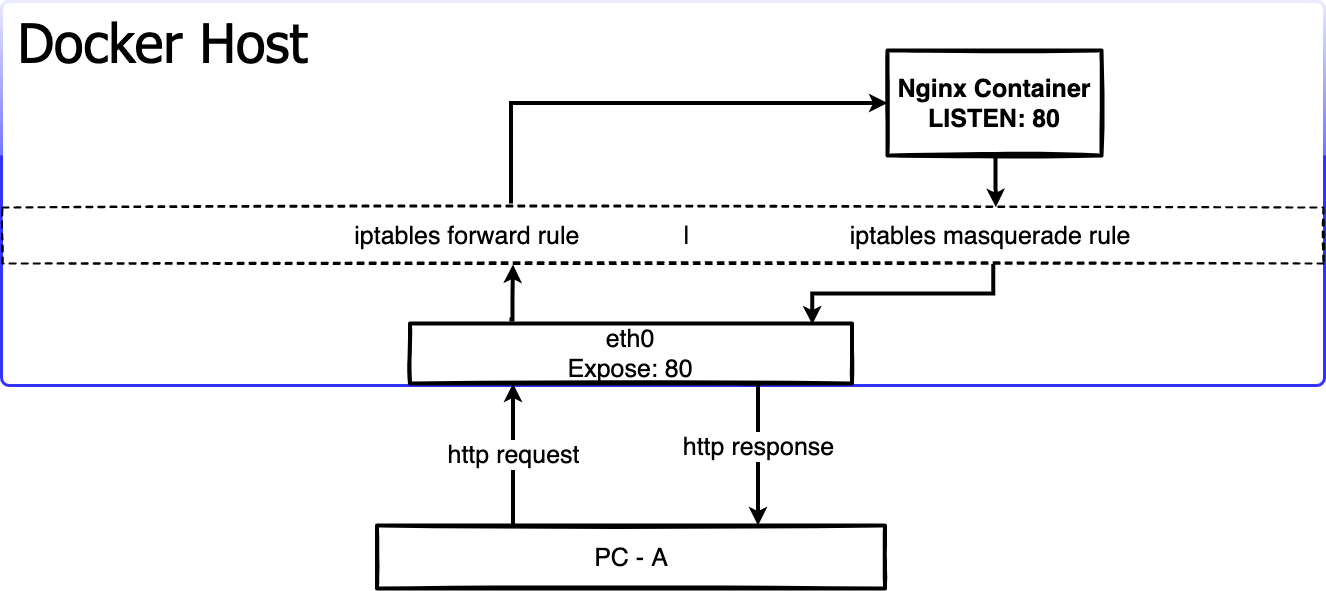

Docker containers can make connections to the outside world because Docker adds an iptables MASQUERADE rule that lets container traffic leave with the host's address, but by default the outside world cannot connect to the containers. If the Docker host serves, for example, a web service from a container, that container must be reachable from outside. To solve this, we publish (expose) the container's port, and Docker creates iptables rules that forward the traffic from the host to the container.

Suppose we create an nginx container and publish its port 80. Let's check how the container is exposed.

```
# docker run -itd --name my-nginx -p 80:80 nginx
7e23c445ea0d28943a2e1cb76f4db337b51041779ca782f1eb4f971d333f83fe
# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
7e23c445ea0d nginx "/docker-entrypoint.…" 5 seconds ago Up 4 seconds 0.0.0.0:80->80/tcp my-nginx
# docker inspect --format '{{.NetworkSettings.IPAddress}}' my-nginx
172.17.0.2
# netstat -lntp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 5444/docker-proxy
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 313/sshd
# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether xx:xx:xx:xx:xx:xx brd ff:ff:ff:ff:ff:ff
    inet 192.168.93.146/24 brd 192.168.93.255 scope global dynamic enp1s0
       valid_lft 84525sec preferred_lft 84525sec
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:bd:1c:1e:d6 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
18: br-93e6e22b983d: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:e0:17:8d:5e brd ff:ff:ff:ff:ff:ff
    inet 172.21.240.0/16 brd 172.21.255.255 scope global br-93e6e22b983d
       valid_lft forever preferred_lft forever
30: vethebc6b97@if29: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 6a:7c:4e:d7:4f:5e brd ff:ff:ff:ff:ff:ff link-netnsid 0
```

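The Docker host listens on port 80 through the docker-proxy process, Docker's userland proxy, which covers cases the iptables rules do not, such as connections to 127.0.0.1:80 from the host itself (note that the OUTPUT rule below excludes !127.0.0.0/8). Let's check the iptables NAT table. Docker has added a DNAT rule to the DOCKER chain that forwards all TCP traffic destined for port 80 on the host to 172.17.0.2:80 (the nginx container).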

```
# iptables -nL -t nat
Chain PREROUTING (policy ACCEPT)
target prot opt source destination
DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)
target prot opt source destination

Chain POSTROUTING (policy ACCEPT)
target prot opt source destination
MASQUERADE all -- 172.21.0.0/16 0.0.0.0/0
MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0
MASQUERADE tcp -- 172.17.0.2 172.17.0.2 tcp dpt:80

Chain OUTPUT (policy ACCEPT)
target prot opt source destination
DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)
target prot opt source destination
RETURN all -- 0.0.0.0/0 0.0.0.0/0
RETURN all -- 0.0.0.0/0 0.0.0.0/0
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:80 to:172.17.0.2:80
```
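The nat table above boils down to two rewrites: the DNAT rule (reached from PREROUTING through the DOCKER chain) changes the destination of packets arriving for port 80 on a local address to 172.17.0.2:80, and MASQUERADE in POSTROUTING replaces the source address of container traffic leaving the bridge with the host's outgoing address. A toy Python model of those two rules, using the addresses from this host; it is a sketch for reasoning about the rules, not how netfilter evaluates them (no connection tracking, no reply translation). iptables -nL also hides interface matches; Docker's MASQUERADE rule typically carries ! -o docker0 (visible with iptables -nvL), which the model approximates with a destination check. PC-A's address in the example is hypothetical.

```python
import ipaddress
from typing import NamedTuple

# Assumed values taken from this host's transcript.
HOST_ADDRESSES = {"192.168.93.146", "172.17.0.1"}     # some of the host's LOCAL addresses
CONTAINER_NET = ipaddress.ip_network("172.17.0.0/16")  # default bridge subnet
PUBLISHED = {("tcp", 80): ("172.17.0.2", 80)}          # DNAT tcp dpt:80 to:172.17.0.2:80
EGRESS_ADDRESS = "192.168.93.146"                      # enp1s0, used by MASQUERADE


class Packet(NamedTuple):
    proto: str
    src: str
    sport: int
    dst: str
    dport: int


def prerouting(pkt: Packet) -> Packet:
    """DNAT: a packet for a published port on a local address is sent to the container."""
    target = PUBLISHED.get((pkt.proto, pkt.dport))
    if pkt.dst in HOST_ADDRESSES and target is not None:
        return pkt._replace(dst=target[0], dport=target[1])
    return pkt


def postrouting(pkt: Packet) -> Packet:
    """MASQUERADE: container traffic leaving the bridge gets the host's address.

    "Leaving" is approximated as "destination outside 172.17.0.0/16"; the real
    rule matches on the output interface instead.
    """
    from_container = ipaddress.ip_address(pkt.src) in CONTAINER_NET
    leaving = ipaddress.ip_address(pkt.dst) not in CONTAINER_NET
    if from_container and leaving:
        return pkt._replace(src=EGRESS_ADDRESS)
    return pkt


if __name__ == "__main__":
    request = Packet("tcp", "192.168.93.10", 51000, "192.168.93.146", 80)  # hypothetical PC-A
    print(prerouting(request))
    # Packet(proto='tcp', src='192.168.93.10', sport=51000, dst='172.17.0.2', dport=80)
```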

From a machine outside the Docker host (PC-A), we send an HTTP request to port 80 on the Docker host and get the HTTP response from the nginx container:

```
❯ curl -I 192.168.93.146
HTTP/1.1 200 OK
Server: nginx/1.21.6
Date: Tue, 22 Feb 2022 20:02:01 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT
Connection: keep-alive
ETag: "61f01158-267"
Accept-Ranges: bytes
```
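The same reachability check can be scripted. A minimal sketch of curl -I in Python using the standard http.client module: it sends a HEAD request and returns the status code and response headers. The function name head is hypothetical; run it from a machine that can reach the Docker host (192.168.93.146 in this example).

```python
import http.client
from typing import Dict, Tuple


def head(host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> Tuple[int, Dict[str, str]]:
    """Send an HTTP HEAD request, like `curl -I`, and return (status, headers)."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, dict(resp.getheaders())
    finally:
        conn.close()


# Usage from PC-A (any machine that can reach the Docker host):
#   status, headers = head("192.168.93.146")
#   print(status, headers.get("Server"))
```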

¶ Overlay Network Deep Dive

Coming soon...