¶ Kubernetes Pods

What is a Pod?

The shared context of a Pod is a set of Linux namespaces, cgroups, and potentially other facets of isolation - the same things that isolate a Docker container. Within a Pod's context, the individual applications may have further sub-isolations applied.

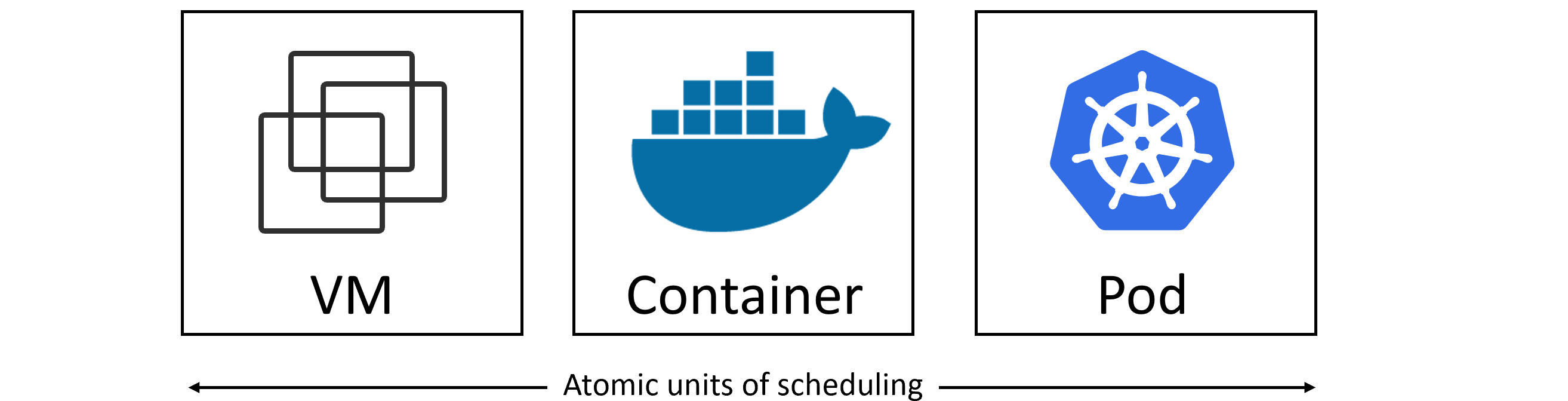

VMs vs. Pods vs. Containers

The atomic unit of scheduling in the virtualization world is the virtual machine (VM). In the Docker world, the atomic unit is the container. In the Kubernetes world, the atomic unit is the Pod. From a footprint perspective, a Pod is slightly bigger than a container, but a lot smaller than a VM.

Digging a bit deeper, a Pod is a shared environment for one or more containers. By putting two containers inside the same Pod, you ensure that they are scheduled to the same node and share the same execution environment[1].

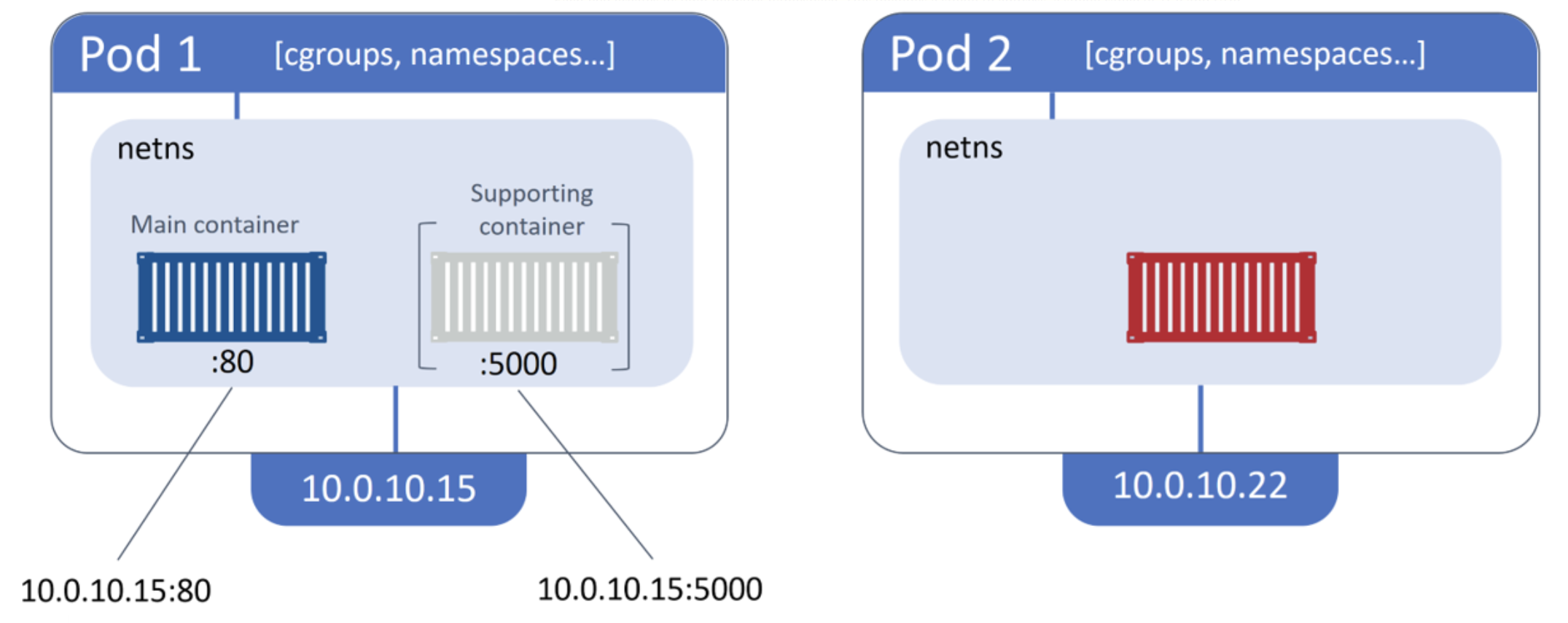

¶ The networking of a Pod

Each Pod creates its own network namespace. This includes a single IP address, a single range of TCP and UDP ports, and a single routing table. The figure below shows two Pods, each with its own IP. Even though one of them is a multi-container Pod, it still only gets a single IP.

In the figure above, external access to the containers in Pod 1 is achieved via the IP address of the Pod, coupled with the port of the container you wish to reach. For example, 10.0.10.15:80 will get you to the main container. Container-to-container communication works via the Pod’s localhost adapter and port number. For example, the main container can reach the supporting container via localhost:5000.
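To make the localhost path concrete, here is a minimal Python sketch that stands in for the two containers of a multi-container Pod. It only approximates the real thing: both roles run as threads in one process rather than as containers sharing a network namespace, the port is picked by the OS instead of being fixed at 5000, and the names `supporting_container` and `main_container` are hypothetical.

```python
import socket
import threading


def supporting_container(server: socket.socket) -> None:
    """Accept one connection and reply, like a helper listening on localhost."""
    conn, _ = server.accept()
    with conn:
        request = conn.recv(1024)
        conn.sendall(b"pong:" + request)


def main_container(port: int) -> bytes:
    """Reach the helper over the loopback interface and read its full reply."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(b"ping")
        client.shutdown(socket.SHUT_WR)  # signal that the request is complete
        chunks = []
        while chunk := client.recv(1024):
            chunks.append(chunk)
        return b"".join(chunks)


def demo() -> bytes:
    # Bind to loopback only; port 0 asks the OS for any free port.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        port = server.getsockname()[1]
        helper = threading.Thread(target=supporting_container, args=(server,))
        helper.start()
        reply = main_container(port)
        helper.join()
    return reply


if __name__ == "__main__":
    print(demo())
```

Because the server binds to 127.0.0.1 only, nothing outside the shared loopback interface can reach it, which mirrors how a supporting container can stay private to its Pod.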

¶ The cgroups of a Pod

At a high level, Control Groups (cgroups) are a Linux kernel technology that prevents individual containers from consuming all of the available CPU, RAM, and IOPS on a node. Individual containers have their own cgroup limits. This means it’s possible for two containers in the same Pod to have their own set of cgroup limits. This is a powerful and flexible model.

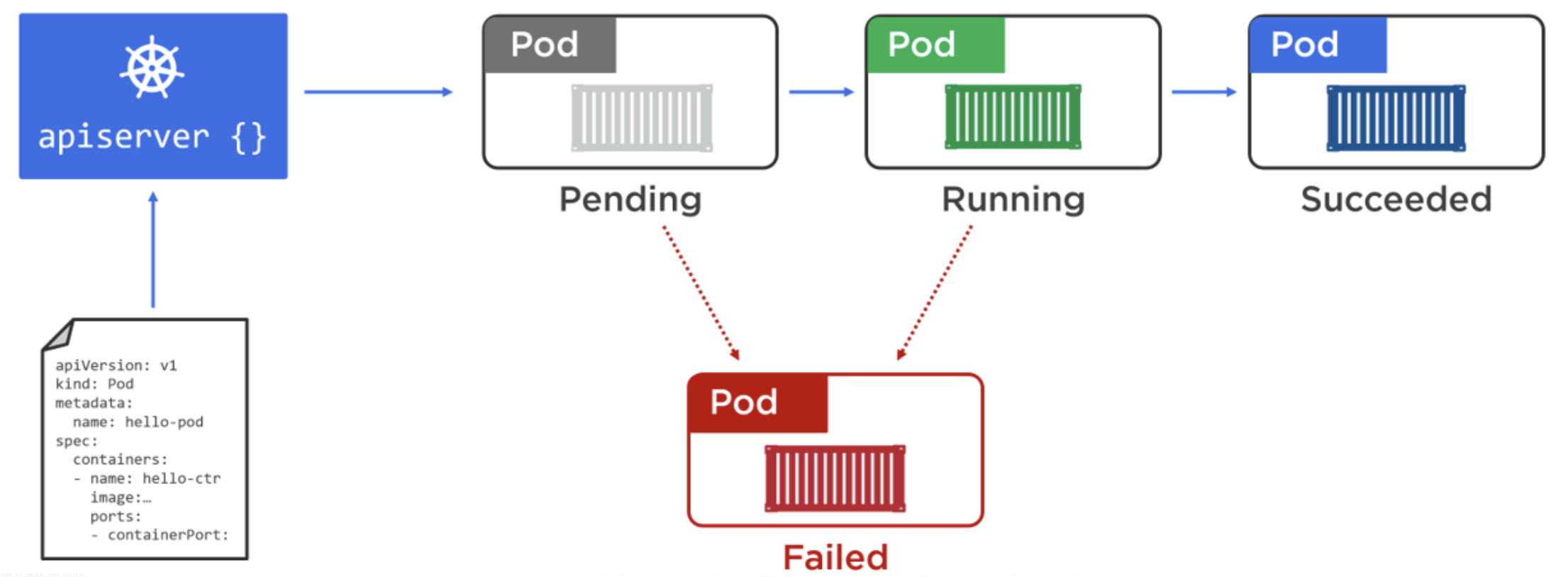
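As a rough illustration of what a per-container limit turns into, the sketch below converts Kubernetes-style resource quantities into the kind of numbers a cgroup works with. It assumes the commonly used CFS period of 100,000 microseconds and handles only a few quantity suffixes; the function names are hypothetical and this is not how any particular kubelet version is implemented.

```python
CFS_PERIOD_US = 100_000  # assumed CFS scheduling period (100 ms)

# Only the binary suffixes used in this sketch; real quantities allow more.
_BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_limit_to_quota_us(cpu: str, period_us: int = CFS_PERIOD_US) -> int:
    """Convert a CPU limit such as "500m" or "2" into a quota in microseconds."""
    if cpu.endswith("m"):
        millicores = int(cpu[:-1])
    else:
        millicores = round(float(cpu) * 1000)
    # A limit of 1000m (one full CPU) may use the whole period.
    return millicores * period_us // 1000


def memory_limit_to_bytes(memory: str) -> int:
    """Convert a memory limit such as "128Mi" into a byte count."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if memory.endswith(suffix):
            return int(memory[: -len(suffix)]) * factor
    return int(memory)  # no suffix: already a plain byte count


if __name__ == "__main__":
    # Two containers in the same Pod can carry different limits.
    print(cpu_limit_to_quota_us("500m"), memory_limit_to_bytes("128Mi"))
    print(cpu_limit_to_quota_us("2"), memory_limit_to_bytes("1Gi"))
```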

¶ The Lifecycle of a Pod

The lifecycle of a typical Pod goes something like this: you define it in a YAML manifest file and POST the manifest to the API server. Once there, the contents of the manifest are persisted to the cluster store as a record of intent (desired state), and the Pod is scheduled to a healthy node with enough resources. The Pod is in the Pending phase from the moment it is accepted, and it stays there after scheduling while the container runtime on the node downloads images and starts the containers.

A Pod's status field is a PodStatus object, which has a phase field. Here are the possible values for phase:

| Value | Description |
|---|---|
| Pending | The Pod has been accepted by the Kubernetes cluster, but one or more of the containers has not been set up and made ready to run. This includes time a Pod spends waiting to be scheduled as well as the time spent downloading container images over the network. |
| Running | The Pod has been bound to a node, and all of the containers have been created. At least one container is still running, or is in the process of starting or restarting. |
| Succeeded | All containers in the Pod have terminated in success, and will not be restarted. |
| Failed | All containers in the Pod have terminated, and at least one container has terminated in failure. That is, the container either exited with non-zero status or was terminated by the system. |
| Unknown | For some reason the state of the Pod could not be obtained. This phase typically occurs due to an error in communicating with the node where the Pod should be running. |
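The rules in the table can be read as a small decision procedure. The Python sketch below encodes them under simplifying assumptions: the `ContainerState` enum and `pod_phase` function are hypothetical names, restart policy is ignored, and the Unknown phase is left out because it reflects a communication failure rather than container state.

```python
from enum import Enum
from typing import Sequence


class ContainerState(Enum):
    NOT_CREATED = "not created yet"
    RUNNING = "running, starting, or restarting"
    EXITED_OK = "terminated with zero status"
    EXITED_ERROR = "terminated with non-zero status or killed by the system"


def pod_phase(containers: Sequence[ContainerState]) -> str:
    """Derive a Pod phase from its container states, following the table."""
    # Pending: at least one container has not been set up yet.
    if not containers or ContainerState.NOT_CREATED in containers:
        return "Pending"
    # Running: everything is created and at least one container is still active.
    if ContainerState.RUNNING in containers:
        return "Running"
    # All containers have terminated; one failure makes the whole Pod Failed.
    if ContainerState.EXITED_ERROR in containers:
        return "Failed"
    return "Succeeded"


if __name__ == "__main__":
    print(pod_phase([ContainerState.RUNNING, ContainerState.EXITED_OK]))
```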

¶ Managing Pods

Before jumping into manifest files, we need to understand the difference between the declarative model and the imperative model.

The imperative model is where you issue long lists of platform-specific commands to build things. For example, suppose you want to create a Pod to run your application in Kubernetes. You would run a series of commands like these:

    $ kubectl create service clusterip my-svc --clusterip="None" -o yaml --dry-run=client | kubectl set selector --local -f - 'environment=qa' -o yaml | kubectl create -f -
    $ kubectl run nginx --image=nginx --restart=Never -l environment=qa

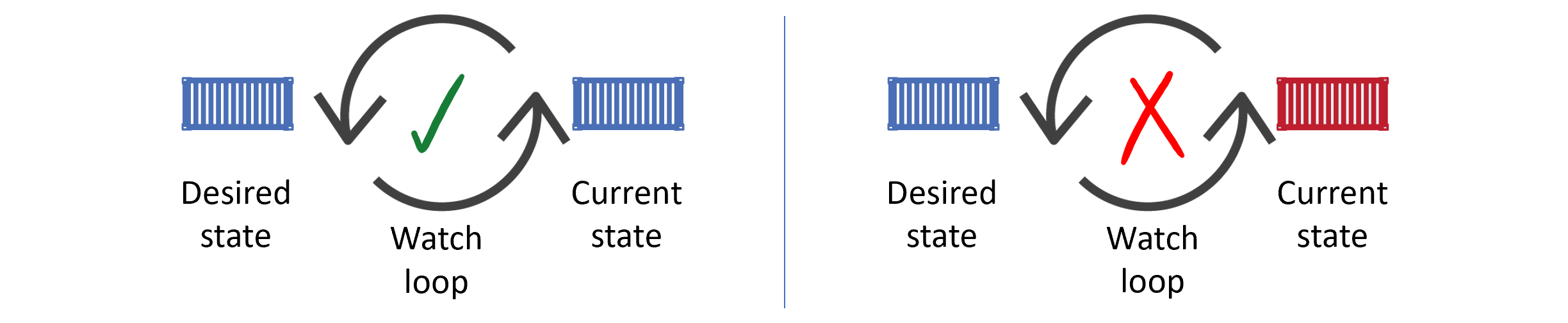

The declarative model, by contrast, is where you tell the cluster how things should look rather than how to get there. It is not only a lot simpler than long scripts with lots of imperative commands; it also enables self-healing and scaling, and lends itself to version control and self-documentation. If the cluster stops looking the way you described, Kubernetes notices the discrepancy and does all of the hard work to reconcile the situation.

In Kubernetes, the declarative model works like this:

- Declare the desired state of an application (microservice) in a manifest file.

- POST the manifest file to the API server

- Kubernetes stores it in the cluster store as the application’s desired state

- Kubernetes implements the desired state on the cluster

- Kubernetes implements watch loops to make sure the current state of the application doesn’t vary from the desired state
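The last two steps above amount to a reconciliation loop: compare desired state with observed state and act on the difference. The following Python sketch shows that idea in miniature. It is not Kubernetes code; the `reconcile` function, the replica-count dictionaries, and the action strings are all hypothetical.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Return the actions needed to move the current state to the desired state.

    Both arguments map an application name to a number of running instances.
    """
    actions = []
    for app in sorted(desired.keys() | current.keys()):
        want = desired.get(app, 0)
        have = current.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
    return actions


if __name__ == "__main__":
    desired_state = {"my-web": 2}   # what the manifest declares
    observed_state = {"my-web": 1}  # what is actually running after a failure
    for action in reconcile(desired_state, observed_state):
        print(action)
```

A real watch loop would run this comparison repeatedly, so a Pod that disappears is noticed on a later pass and replaced without anyone issuing a command.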

¶ Manifest file of Pod

This is a simple Pod manifest file. Let’s step through what the YAML file is describing.

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-web
      labels:
        zone: test
        version: v1
    spec:
      containers:
      - name: aaa-web
        image: nginx:1.21.6
        ports:
        - containerPort: 80

Straight away we can see four top-level fields:

- apiVersion: The usual format for `apiVersion` is `<api-group>/<version>`. Pods are defined in a special API group, called the core group, which omits the `api-group` part.

- kind: The `kind` field tells Kubernetes the type of object being deployed.

- metadata: This section is where you attach a name and labels. These help you identify the object in the cluster, as well as create loose couplings between different objects.

- spec: This section is where you define the containers that will run in the Pod, such as which image to use for each container and which ports to expose.
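The four fields can also be examined programmatically. This Python sketch holds the same manifest as a plain dictionary, checks that the four top-level fields are present, and splits `apiVersion` into its group and version parts. The helper names are hypothetical, and `apps/v1` appears only as an example of a non-core group.

```python
from typing import List, Tuple

REQUIRED_TOP_LEVEL_FIELDS = ("apiVersion", "kind", "metadata", "spec")


def split_api_version(api_version: str) -> Tuple[str, str]:
    """Split "<api-group>/<version>" into (group, version).

    The core group has no group part, so "v1" yields an empty group.
    """
    group, _, version = api_version.rpartition("/")
    return group, version


def missing_fields(manifest: dict) -> List[str]:
    """List any of the four top-level fields that the manifest lacks."""
    return [name for name in REQUIRED_TOP_LEVEL_FIELDS if name not in manifest]


# The Pod manifest from this section, expressed as a Python dictionary.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "my-web", "labels": {"zone": "test", "version": "v1"}},
    "spec": {
        "containers": [
            {
                "name": "aaa-web",
                "image": "nginx:1.21.6",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

if __name__ == "__main__":
    print(missing_fields(pod_manifest))
    print(split_api_version(pod_manifest["apiVersion"]))
```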

¶ Working with Pods

Save the manifest file as pod_nginx.yml in your current directory, then use the following kubectl command to POST the manifest to the API server and create the Pod.

    $ cat pod_nginx.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: my-web
      labels:
        zone: test
        version: v1
    spec:
      containers:
      - name: aaa-web
        image: nginx:1.21.6
        ports:
        - containerPort: 80

    $ kubectl apply -f pod_nginx.yml
    pod/my-web created

Although the Pod is showing as created, it might not be fully deployed and available yet. This is because it takes time to pull the image. Run a kubectl get pods command to check the status.

    $ kubectl get pods --watch
    my-web   0/1   Pending             0     0s
    my-web   0/1   Pending             0     0s
    my-web   0/1   ContainerCreating   0     0s
    my-web   1/1   Running             0     1s

    $ kubectl get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE     IP            NODE           NOMINATED NODE   READINESS GATES
    my-web   1/1     Running   0          2m24s   10.244.3.40   cerek-knode3   <none>           <none>
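The same status information is available in machine-readable form, for example from `kubectl get pod my-web -o json`. The Python sketch below checks readiness from such a document. The sample JSON is hand-written and heavily trimmed for illustration rather than captured from a cluster, and `pod_is_ready` is a hypothetical helper.

```python
import json

# Hand-written, trimmed sample that mimics the shape of a Pod object.
SAMPLE_POD_JSON = """
{
  "metadata": {"name": "my-web"},
  "status": {
    "phase": "Running",
    "podIP": "10.244.3.40",
    "containerStatuses": [{"name": "aaa-web", "ready": true}]
  }
}
"""


def pod_is_ready(pod: dict) -> bool:
    """True when the Pod is Running and every container reports ready."""
    status = pod.get("status", {})
    container_statuses = status.get("containerStatuses", [])
    return (
        status.get("phase") == "Running"
        and bool(container_statuses)
        and all(c.get("ready", False) for c in container_statuses)
    )


if __name__ == "__main__":
    pod = json.loads(SAMPLE_POD_JSON)
    print(pod["metadata"]["name"], pod_is_ready(pod))
```

Checking both the phase and the per-container `ready` flags matters because a Pod can be in the Running phase while one of its containers is still starting, which is what a READY value such as 0/1 indicates.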

    $ kubectl describe pods my-web
    Name:         my-web
    Namespace:    default
    Priority:     0
    Node:         cerek-knode3/192.168.93.63
    Start Time:   Fri, 13 May 2022 10:01:35 -0700
    Labels:       version=v1
                  zone=test
    Annotations:  <none>
    Status:       Running
    IP:           10.244.3.40
    IPs:
      IP:  10.244.3.40
    Containers:
      aaa-web:
        Container ID:   docker://37fe02bc6320fbd76c9071fa217f6f6342ed7c7e8cbda15e72be490b70d0fc9e
        Image:          nginx:1.21.6
        Image ID:       docker-pullable://nginx@sha256:19da26bd6ef0468ac8ef5c03f01ce1569a4dbfb82d4d7b7ffbd7aed16ad3eb46
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Fri, 13 May 2022 10:01:36 -0700
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-z7p49 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      kube-api-access-z7p49:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age    From               Message
      ----    ------     ----   ----               -------
      Normal  Scheduled  6m29s  default-scheduler  Successfully assigned default/my-web to cerek-knode3
      Normal  Pulled     6m29s  kubelet            Container image "nginx:1.21.6" already present on machine
      Normal  Created    6m29s  kubelet            Created container aaa-web
      Normal  Started    6m29s  kubelet            Started container aaa-web

[1] Shared execution environment means that the Pod has a set of resources that are shared by every container that is part of the Pod. These resources include IP addresses, ports, hostnames, sockets, memory, and volumes.